One of the most popular buzz words in recent times has been Artificial Intelligence (‘A.I’) – and as fascinating, and controversial, as the development of A.I. software is, it also raises some interesting questions on IPR. In fact, in a draft report released recently, the European Parliament’s Committee on Legal Affairs calls for “the elaboration of criteria for “own intellectual creation” for copyrightable works produced by computers or robots…”

The laws surrounding A.I. and IPR, specifically copyright, are increasingly growing murkier with advancements in A.I. technology. This two-part series, therefore, looks into questions of authorship of a copyrightable work when the content in question is ‘created’ not entirely by a natural or legal person.

I must emphasise, these posts do not argue that A.I. has gained ‘intelligence’ yet, and nor does it argue that it should be given ownership of IP. Rather than engaging in questions of what A.I. will be and how that affects copyright law, these posts are a thought experiment based on what A.I. currently is, in the first post, and what that means for copyright law today, in the second post. [Long post ahead]

The Evolution of Artificial Intelligence Software

This is not a new question per se, of course. Proponents of A.I. have been arguing for and prophesising the creation of IP by artificial intelligences for decades now. The main hurdle to this has been the fact that ownership of copyright rests on the ‘creation’ of it, requiring a spark of creativity on the part of the software, while most of the software that generated content till recently did so procedurally, as per its pre-programmed instructions. The claim that there was any genuine ‘creativity’ that could be located within the software itself was, therefore, a rather far-fetched one.

Recent A.I. software, however, based on advanced Artificial Neural Networks (ANNs) – again, not technology that is quite novel, but that has benefitted greatly from recent leaps in networking and data storage & processing – is rather different. (This is based on my understanding of ANNs – if I’m mistaken anywhere, please do correct me).

ANNs are networks of software and hardware that are designed to emulate the processing of the human brain. ANNs are based on the concepts of Machine Learning, to be capable of actually adapting and, crucially, learning. The final output of an ANN’s learning process, though guided by its programming, is not coded into it. Moreover, and crucially, the creator of the program is usually unable to predict how the software will understand something, or what meaning it will give to it. The program has to be taught this information.

Recent months have seen these ANNs gain massive media coverage for ‘creating’ multiple forms of content, ranging from songs to movies, even driving cars, in Tesla’s autopilot and Google Cars, and playing Go. Google’s A.I. system has actually reportedly been recognised as the legal driver of its cars by the US National Highway Traffic Safety Administration.

This analysis focuses on one specific ANN – Google’s DeepDream. (Note how many of these public, eye-catching AIs are coming from Google’s – or rather Alphabet’s – now massive network of companies and projects.) All of the other ANNs raise fascinating issues as well, but DeepDream lends itself the most easily to the current analysis.

Google DeepDream

Google’s DeepDream was originally developed as part of an image recognition software. When a new image is fed into the program, the program analyses the same, ‘recognises’ it, and categories it. To ‘recognise’ the contents of an image, the program needs to know the meaning and context of a term. DeepMind was, being an ANN, taught the context of these images and to recognise the same independently.

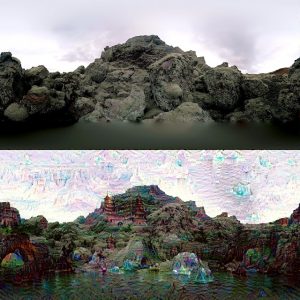

Google, then, reversed the process, using it to create ‘art’. In the ‘Deep Style’ technique, a more advanced version of DeepDream, the ANN ‘is capable of using it’s own knowledge to interpret a painting style and transfer it to the uploaded image’. You can see some examples of this process here.

Instead of asking DeepDream to recognise patterns in images, it basically fed the program an image and asked it to recognise a ‘pattern’, sometimes one that was not there, and put it to loop. That is, it gave DeepDreams an image, with or without any birds in it, and asked it to look for birds, repeatedly. The program found a pattern in the fed image that resembled its ‘understanding’ of the requested pattern, i.e., in this example a bird, repeated the process multiple times, reidentifying and increasing the size of the image, and gave that as an output. And that, as Wired put it, was DeepDream “pumping out trippy – and pricey – art”.

The creation of the work here is based on what DeepDream’s has understood about the meaning of ‘birds’, and the patterns it recognises in the images presented to it. In the illustration attached, for instance, it creates pagodas where there were none. There is, inarguably, at least a small level of ‘creativity’ located within the software itself, even if at the level of ‘derivative works’, that is not procedural, and that is not sourced from the its programmers or teachers. In fact, part of the reason for the original experiment that led to DeepDream was to try and understand how ANNs worked, and how they ‘understood’, and part of the conclusion of the DeepDream experiment was that, in some cases, DeepDream did not understand things the way it was expected to.

Notably, these pieces are also being commercialised – four of the pieces created by Google’s multiple algorithms were auctioned off in a gallery show in February this year. You can try DeepDream yourself, here (Note: results tend to vary).

So it’s quite clear that ANNs, that A.I.s, are evolving, and that they are not quite as dependent on human creativity anymore, even if they are not entirely independent of it. The next post will look at the effect this has on considerations of creativity and authorship under copyright law.

With respect to music, a second issue, beyond the status of AI as an author/creator is in play here.

In understanding music as an abstract language, it becomes apparent that there are very limited melodic and harmonic combinations possible within a 7 note (modal), 5 note (pentatonic), or 12 note (chromatic) system. An analogy could be the limited number of words and sentences possible with a 5, 7, or 12 letter alphabet.

Within this limited musical system, every possible melodic and harmonic fragment/combination has been conceived before, used in varying contexts – historically and stylistically. Ever expanding databases of public domain musical fragments may eventually be catalogued, readily accessible and used in legal defense against claims of copyright infringement. Uniqueness of integrated musical context may ultimately be the deciding factor in determining ownership/infringement vs. public domain variation.

With the existence of immense databases of worldwide historical (public domain) musical fragments, it should not be difficult to find prior examples of any basic melodic/harmonic fragments being used in the generative variation processes of AI, preexisting in the public domain.

“Copyright law excludes protection for works that are produced by purely mechanized or random processes, so the question comes down to the extent of human involvement, and whether a person can be credited with any of the creative processes that produced the work.” I wonder how the court would define ‘creative involvement’. Would this idea be extended to creative editing and arrangement of randomly generated AI fragments?

And in practice, will a distinction emerge between human creative involvement as musical compositor (constructing a final sonic ‘image’ by combining layers of previously-created/computer generated material) vs composer – as poles in a ‘creative continuum’ between arranging/editing and innovative exploration/expression?